Getting Into a Causal Flow

May 20, 2020

Why Should You Care?

Most, if not all, business analytics questions are inquiries of cause and effect. Designers estimate the extent to which UX changes affect user engagement metrics. Finance departments estimate the returns generated by costly corporate initiatives. Marketers fine-tune email newsletters and subscription services and estimate how much those improvements reduce churn. In the statistics and econometrics literature, the term causal inference describes the quantitative process of answering such inquiries. Traditional inference problems, like prediction, may take in a set of prior events and predict a distribution over a set of future events. Causal inference, by contrast, is mainly concerned with generating and testing hypotheses, commonly referred to as structural models, about the relationships between explanatory variables (variables whose changes act as a cause) and outcome variables (variables that change as the result of an effect). Causal inference techniques are largely concerned with distinguishing associative relationships (relationships in which a change in one variable is associated with a change in another) from causal relationships (relationships in which one event, the cause, contributes to the production of another event, the effect).

Why Is Causal Inference Necessary?

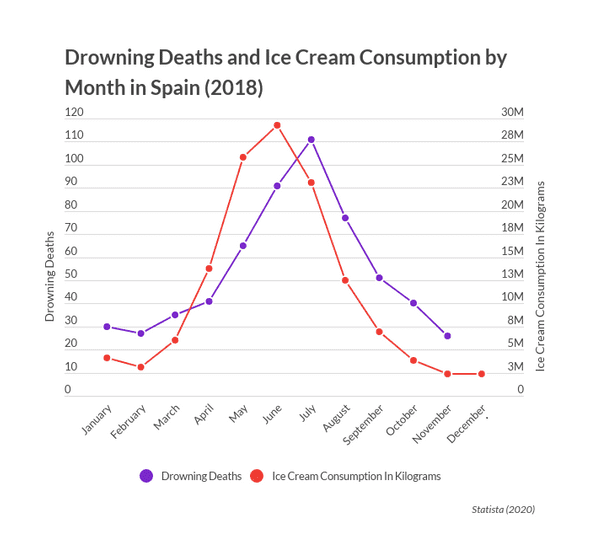

What is insufficient about traditional inference techniques, such as regression? Many are familiar with the adage “correlation does not imply causation”, but why is the distinction between associative and causal relationships so important? Consider the time series in Figure 1, which plot drowning deaths and ice cream consumption by month in Spain.

An Illustrative Example

These time series track quite closely together, with drowning deaths slightly leading ice cream consumption.

When Is “Traditional” Inference Good Enough?

It is illustrative to consider the inference tasks for which the associative relationship alone is sufficient, in order to understand which kinds of tasks do not call for causal inference. For example, let’s say you’re an analyst given the prediction task of estimating drowning deaths in December of 2018, using only the data plotted in Figure 1. This can be done easily: ice cream consumption and drowning deaths are highly correlated, so the small change in ice cream consumption from November to December likely corresponds to a small change in drowning deaths between the two months.

Similarly, let’s say you are an analyst given the classification task of estimating the probability that a particular death in Spain in the month of December was due to drowning, using the time series plotted in Figure 1. Again, it is easy to see that drowning deaths will likely continue to track ice cream consumption and hardly change from November to December. If about 37,000 people died in Spain in December of 2018, it’s clear without any additional information that the probability that a particular death was due to drowning is quite low.

When Is It Not?

OK, so if an understanding of associative relationships is sufficient for classification and prediction tasks, are there any tasks that require an understanding of causal relationships? I sure hope so; if not, I started this newsletter for nothing. The most common inference task that requires an understanding of causal relationships is an intervention recommendation task: a task in which an analyst must decide how best to intervene in order to achieve a desired outcome. For example, imagine you are now tasked with constructing a plan of action to reduce drowning deaths in Spain by 50% going into the summer of 2020. If you were to rely only on your understanding of the associative relationship between ice cream consumption and drowning deaths, you could quickly propose a plan to shut down all ice cream trucks in the entire country, as that would certainly correlate with a drop in drownings! However, such a recommendation is ridiculous, and would surely correlate with you being out of a job by the end of the week.
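To make the failure of the ice cream ban concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration, not data from Figure 1: the `simulate` function, the coefficients, and the hypothetical structural model in which a seasonal temperature signal drives both ice cream consumption and drownings. Under that assumed model, forcing ice cream consumption to zero leaves drownings untouched, even though the two series are strongly correlated in the observational data.

```python
import numpy as np

def simulate(n_months, seed, ban_ice_cream=False):
    """Hypothetical structural model: temperature drives both series."""
    rng = np.random.default_rng(seed)
    month = np.arange(n_months)
    # Seasonal temperature with a little noise (made-up numbers).
    temperature = (18 + 10 * np.sin(2 * np.pi * (month - 3) / 12)
                   + rng.normal(0, 1, n_months))
    # Ice cream consumption depends on temperature only.
    ice_cream = 2.0 * temperature + rng.normal(0, 2, n_months)
    if ban_ice_cream:
        # The intervention: override ice cream consumption directly.
        ice_cream = np.zeros(n_months)
    # Drownings depend on temperature only, never on ice cream.
    drownings = 1.5 * temperature + rng.normal(0, 2, n_months)
    return ice_cream, drownings

# Same seed, so both worlds share the same weather and the same noise.
ice_obs, drown_obs = simulate(120, seed=0)
ice_ban, drown_ban = simulate(120, seed=0, ban_ice_cream=True)

observed_corr = np.corrcoef(ice_obs, drown_obs)[0, 1]
print("correlation without intervention:", round(observed_corr, 2))
print("mean drownings, no ban:", round(drown_obs.mean(), 2))
print("mean drownings, ban:   ", round(drown_ban.mean(), 2))
```

Because drownings in this toy model never read the ice cream variable, the ban changes nothing about them. A real analysis would not know the structural model in advance, which is exactly the gap causal inference techniques try to fill.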

Associative relationships are not enough to develop the causal understanding necessary to inform intervention recommendations. In order to understand which intervention should be made to improve an outcome, we must understand the causes behind the underlying processes generating our data. In our pedagogical example, the fact that ice cream consumption and drownings are correlated does not mean that changes in ice cream consumption cause changes in drownings. Similarly, changes in drownings are not causing changes in ice cream consumption. Rather, it is a third variable, temperature, that is responsible for the tight correlation you see in this data. In future posts, I will discuss causal discovery problems, for which one can leverage a class of algorithms to automatically discover such causal relationships. For now, your key takeaway should be as follows: without causal inference techniques, you cannot mathematically verify that any event directly or indirectly causes any particular target event. Standard statistical analysis only enables analysts to infer associative relationships between variables. For this reason, analytical tools describing exactly how ice cream consumption and monthly drownings move together over time are not sufficient to inform policy decisions that aim to decrease drowning deaths.
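One simple way to see the role of a third variable is to compare the raw correlation with the correlation that remains after accounting for temperature. The sketch below uses synthetic data; the coefficients and the `residuals` helper are hypothetical, and the check only works this cleanly because the assumed model is linear and temperature is measured. It regresses each series on temperature and correlates what is left over, which is often called a partial correlation.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 240  # months of synthetic data
month = np.arange(n)

# Assumed data-generating process: temperature is the common cause.
temperature = (18 + 10 * np.sin(2 * np.pi * (month - 3) / 12)
               + rng.normal(0, 1, n))
ice_cream = 2.0 * temperature + rng.normal(0, 2, n)
drownings = 1.5 * temperature + rng.normal(0, 2, n)

def residuals(y, x):
    """Part of y that a straight-line fit on x does not explain."""
    slope, intercept = np.polyfit(x, y, 1)
    return y - (slope * x + intercept)

# Raw association between the two series.
raw_corr = np.corrcoef(ice_cream, drownings)[0, 1]

# Association left after removing the linear effect of temperature.
partial_corr = np.corrcoef(
    residuals(ice_cream, temperature),
    residuals(drownings, temperature),
)[0, 1]

print("raw correlation:    ", round(raw_corr, 2))
print("partial correlation:", round(partial_corr, 2))
```

In this toy setup the raw correlation is high while the partial correlation sits near zero, which is what one would expect if temperature alone links the two series. With real data the confounders are rarely known or fully measured, so a check like this is a starting point rather than a proof.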

Causal inference has long been a primary goal of academic researchers in a variety of fields who are concerned with evaluating the success of particular interventions. It helps economists determine the expected effect of a minimum wage increase in a particular US state. It helps medical researchers estimate how much of a decrease in blood glucose level can be attributed to beginning an insulin treatment schedule. It helps poker-playing AI software determine the effect a particular action has had on its win probability. Causal inference can also be a powerful tool for business analysts, who commonly query large repositories of customer information in order to inform a data-driven decision-making process. In this blog series, I will discuss common techniques for applying causal inference in a business analytics setting, mainly those that leverage artificial intelligence and machine learning to swiftly build, evaluate, and tweak intervention recommendation models and unlock latent value from large-scale data sets.